Towards the end of the Fall 2018 semester, I found out that one of the ABET learning goals for the Numerical Methods course is the ability to select and use software tools, based on their numerical methods, for a range of problems. Unfortunately, none of the controlled assessments for the course (quizzes and final exam) included questions that would let me measure this achievement.

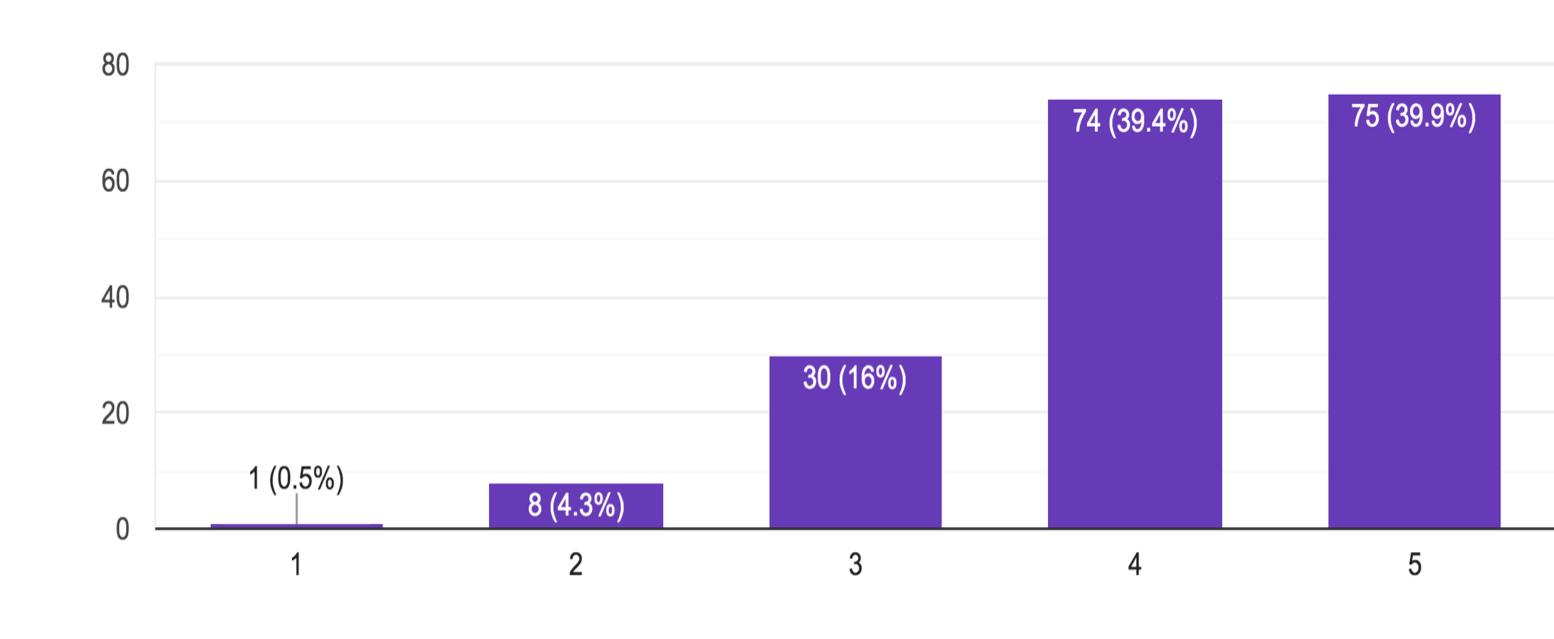

For that reason, I decided to offer a last-minute bonus quiz during the last week of classes, prepared exclusively to evaluate this ABET learning objective. The quiz consisted of three short coding questions, each asking students to solve a real application of numerical methods. These types of questions appeared in every weekly homework (HW), but not in quizzes. Students found out about this bonus opportunity two days before the quiz, and about 78% decided to take it. The quiz average was extremely low (44%), and only 66% of the students completed at least two of the questions correctly. Despite the low average, student responses to a survey indicated that they consider this type of assessment important, as shown in Fig. 1. They also believe they would have done better on this assessment if they had taken more quizzes like it during the semester.

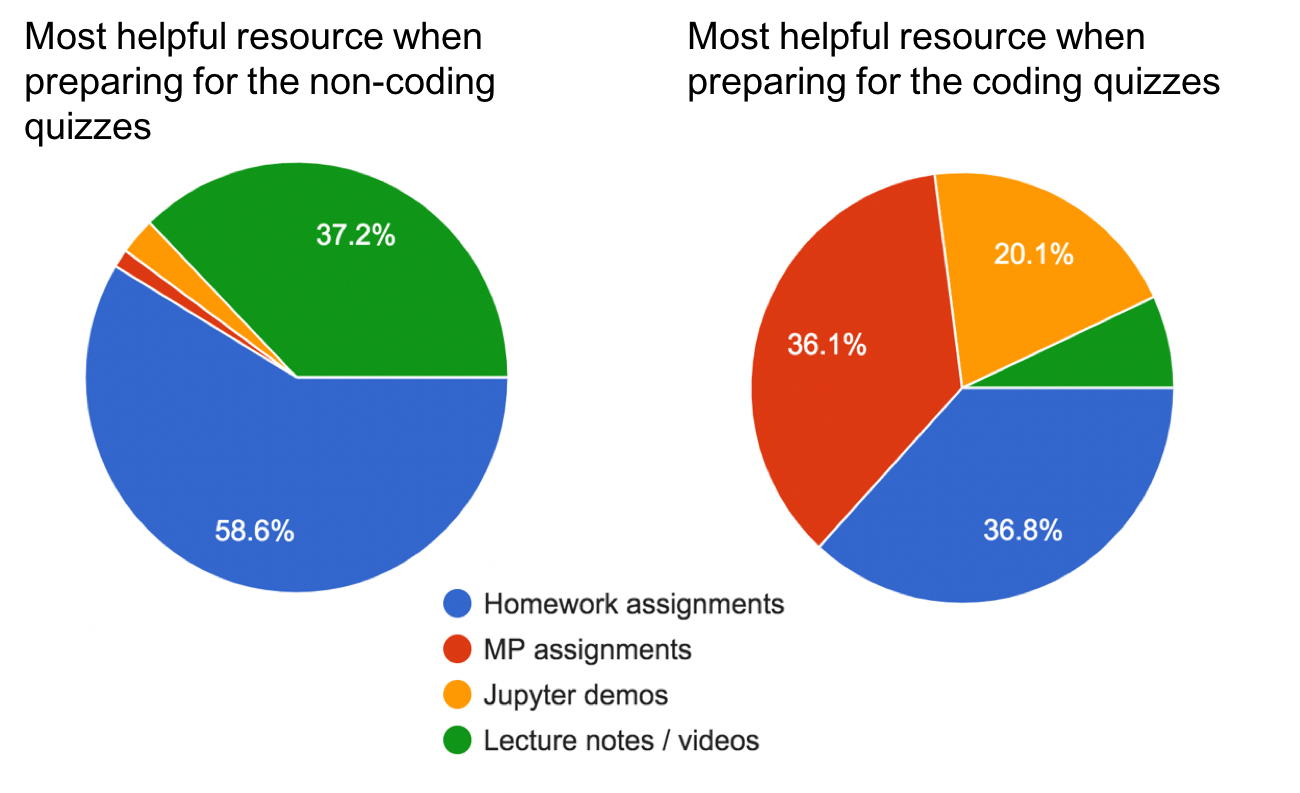

In Spring 2019, I added two short coding quizzes during the semester (in addition to the already existing non-coding quizzes), and kept the same Machine Problems (MPs) and coding HWs as learning opportunities. The coding quiz averages were very high (86% and 90%). Two of the coding questions used in Fall 2018 were included in the final exam for Spring 2019. The average on one question rose from 46% to 73%, and the average on the other rose from 41% to 60%. The average of the non-coding quizzes was not significantly different between the two semesters. One hypothesis to explain this increase is that students didn’t consider MPs and HW coding questions “important” in Fall 2018, since they were not expecting assessments in that format. When completing HW in Fall 2018, their only motivation was the immediate grade for that assignment, with no long-term motivation for learning. In Spring 2019, students knew they would have quizzes testing their programming knowledge, and hence they started using the MPs and coding examples as learning tools. This hypothesis is supported by survey responses from Spring 2019, illustrated in Fig. 2.

Examples of non-coding and coding questions implemented via PrairieLearn.